Adapting data center technologies for AI

Demand for data center capacity has skyrocketed, led by fast-growing markets such as Atlanta and Portland. At the same time, the industry is grappling with sustainability concerns, balancing customer needs against environmental responsibility. This article discusses what businesses should consider as they enhance and expand their data center capabilities, with a focus on artificial intelligence (AI) and machine learning.

AI’s impact on data center technologies: A market shift

AI’s influence on data center technologies is profound. It was initially propelled by service-centric companies offering AI functionality; now a growing number of corporations are exploring how to support their own AI projects and initiatives. Large-scale GPU deployments consume substantial capacity, and they continue to grow. As AI and machine learning become commonplace, demand for capacity is outpacing supply, shifting market dynamics as these applications move into production.

Liquid cooling for high-density workloads

As the market progresses, the trend is clearly toward liquid cooling for high-density workloads, driven by the need to support advanced infrastructure and the escalating demand for efficient cooling. Unlike traditional air cooling, liquid cooling uses a closed-loop system to deliver coolant directly to the equipment. A coolant distribution unit circulates the coolant to each cabinet in the data center, where it absorbs heat from the equipment, typically at the chip, before returning to the facility cooling units. This mechanism is not only efficient: by reducing the need for fan operation, it also curbs the power the equipment consumes.
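To give a feel for the closed loop described above, the sketch below estimates how much coolant must circulate to carry away a cabinet's heat, using the standard heat balance Q = m_dot * c_p * dT. It is a rough illustration only: it assumes plain water as the coolant, and the 80 kW cabinet and 10 K temperature rise are hypothetical values, not figures from any specific deployment. Real loops often use other fluids with different properties.

```python
# Illustrative only: estimates coolant flow from the heat balance Q = m_dot * c_p * dT.
# All numbers below are assumptions, not vendor or facility specifications.

WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximate value for liquid water


def required_flow_l_per_min(heat_load_kw: float, delta_t_k: float) -> float:
    """Return the water flow (L/min) needed to carry away heat_load_kw
    when the coolant warms by delta_t_k between supply and return."""
    if heat_load_kw < 0 or delta_t_k <= 0:
        raise ValueError("heat load must be >= 0 and temperature rise must be > 0")
    mass_flow_kg_per_s = (heat_load_kw * 1000.0) / (
        WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k
    )
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    # Hypothetical 80 kW cabinet with a 10 K coolant temperature rise.
    print(f"{required_flow_l_per_min(80, 10):.1f} L/min")
```

Under these assumptions the cabinet needs roughly 115 L/min of water. Doubling the allowed temperature rise halves the required flow, which is one reason supply and return temperatures matter when sizing a coolant distribution unit.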

Balancing workloads and cooling solutions

Workloads vary, and it’s vital to pair each kind of workload with the appropriate data center cooling capacity and utilities. For instance, training large language models can take place in remote areas with ample power, since training doesn’t require proximity to users. Conversely, inference workloads that serve low-latency applications need to be placed closer to users. While supporting these workloads, businesses must also account for traditional enterprise workloads, which are still evolving, and ensure connectivity to all available hybrid and multi-cloud options.
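The link between distance and latency can be sketched with a simple propagation estimate. The example below assumes light travels through optical fiber at about 200,000 km/s (roughly two-thirds of its speed in a vacuum) and ignores routing, switching, and queuing delays, so the results are lower bounds rather than predictions. The function name and the sample distances are hypothetical.

```python
# Illustrative only: lower bound on round-trip time from fiber propagation delay.
# Real latency is higher because of routing, switching, and queuing.

FIBER_SPEED_KM_PER_S = 200_000.0  # assumption: about two-thirds of light speed in vacuum


def min_round_trip_ms(fiber_path_km: float) -> float:
    """Return the minimum round-trip time in milliseconds over a fiber path."""
    if fiber_path_km < 0:
        raise ValueError("fiber path length must be >= 0")
    return 2.0 * fiber_path_km / FIBER_SPEED_KM_PER_S * 1000.0


if __name__ == "__main__":
    # Hypothetical distances between users and the data center.
    for km in (50, 500, 2000):
        print(f"{km:>5} km -> at least {min_round_trip_ms(km):.1f} ms round trip")
```

Every 100 km of fiber adds about 1 ms of round-trip time under this assumption. That is negligible for a training job but can matter for interactive inference.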

The swift emergence of AI and large language models has required the market to supply and support these new workloads. Yet traditional workloads have not gone away, so large AI workloads and evolving enterprise workloads now compete for the same supply. This competition forces businesses to plan around power for future growth, sustainability objectives, and how these workloads will evolve.

Exploring advanced cooling technologies

While liquid cooling is gaining popularity, it’s not the sole solution under consideration. Immersion cooling is a topic of intense discussion, although its high density and management challenges have so far kept it more common in R&D than in mainstream workloads. Even so, data center cooling innovation continues unabated, and immersion cooling could become a future standard.

Evaluating power delivery and efficiency

The escalating power of GPUs and the surging demand for high-density workloads necessitate more efficient cooling solutions. Consequently, businesses must weigh numerous factors when deciding workload placement, such as the data center’s location, available utilities, and long-term capabilities. It’s also crucial to consider the workload’s specific requirements and the desired business outcomes.

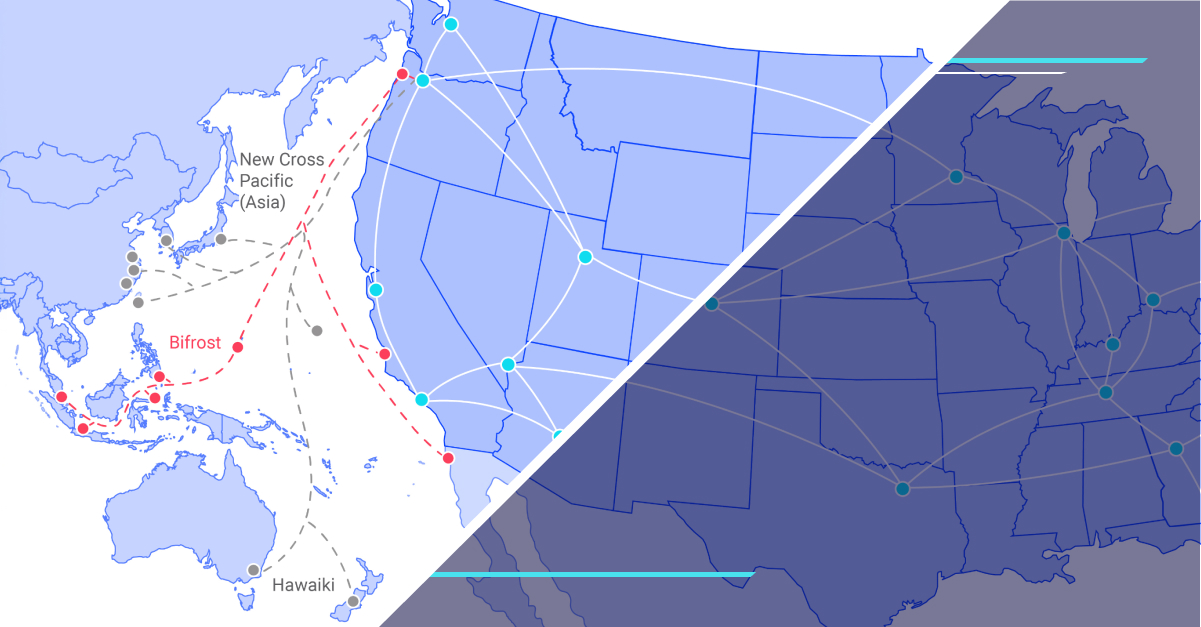

In addition to cooling needs, businesses must also evaluate power delivery and efficiency. The discussion has shifted from 120 versus 208 volts to 415 volts. The cost of equipment is another significant factor: with GPUs ranging from $30,000 to $50,000 each, the system’s design and redundancy are paramount.

The importance of interconnection

Interconnection is a vital component of any data center strategy. As workloads become more complex and more platforms need to interact, its importance grows. As the industry evolves, businesses must stay ahead to leverage new technologies and avoid being left behind.
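To make the voltage comparison in the power delivery discussion more concrete, the sketch below estimates how much capacity a single circuit can deliver at each voltage for the same current. It is an illustration under stated assumptions: the 120 V circuit is treated as single-phase, the 208 V and 415 V circuits as three-phase (line-to-line voltage), the breaker is a hypothetical 30 A, and continuous load is limited to 80% of the breaker rating, a common practice that varies by jurisdiction and design.

```python
import math

# Illustrative only: apparent power available from one circuit at a given voltage.
# The 80% continuous-load factor and the 30 A breaker are assumptions.

CONTINUOUS_LOAD_FACTOR = 0.8


def single_phase_kva(volts: float, amps: float,
                     load_factor: float = CONTINUOUS_LOAD_FACTOR) -> float:
    """Usable apparent power (kVA) of a single-phase circuit."""
    return volts * amps * load_factor / 1000.0


def three_phase_kva(line_to_line_volts: float, amps: float,
                    load_factor: float = CONTINUOUS_LOAD_FACTOR) -> float:
    """Usable apparent power (kVA) of a balanced three-phase circuit."""
    return math.sqrt(3) * line_to_line_volts * amps * load_factor / 1000.0


if __name__ == "__main__":
    amps = 30.0  # hypothetical breaker rating
    print(f"120 V single-phase: {single_phase_kva(120, amps):.2f} kVA")
    print(f"208 V three-phase:  {three_phase_kva(208, amps):.2f} kVA")
    print(f"415 V three-phase:  {three_phase_kva(415, amps):.2f} kVA")
```

At the same current, the 415 V circuit delivers about twice the capacity of the 208 V circuit. This helps explain why higher distribution voltages come up as rack densities rise.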

Conclusion

The data center industry is currently navigating an exhilarating yet demanding period. The swift progression of AI and machine learning is triggering considerable shifts in data center technologies, necessitating a reevaluation of existing strategies. As a data center provider, we pledge to offer cost-effective and environmentally friendly solutions to address our clients' needs in this dynamic landscape. By focusing on catering to the varying needs of AI-driven and conventional enterprise workloads, we stand prepared to embrace the future of data center technologies.

Want to dive deeper into innovative cooling and energy solutions for high-density data centers?

Join us for our latest webinar: The power to perform: Innovative cooling and energy solutions for high-density data centers. Learn from industry experts Sherri Harrell, SVP Product Management, and Craig Cook, SVP Solutions Architecture & Engineering, as they explore cutting-edge cooling technologies and strategies. Don’t miss out on this opportunity to enhance your data center’s performance and sustainability.